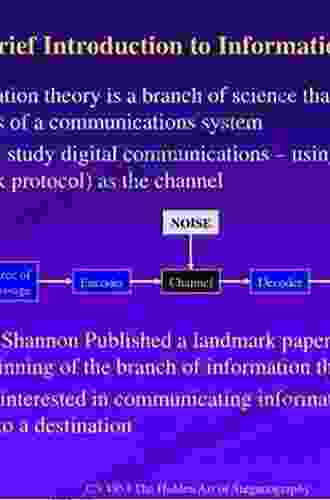

An Introduction to Information Theory

Information theory is a branch of mathematics that deals with the quantification, storage, and transmission of information. It is a fundamental tool for understanding communication systems, data compression, and cryptography.

4.4 out of 5

| Detail | Value |
|---|---|
| Language | English |
| File size | 6969 KB |
| Text-to-Speech | Enabled |
| Enhanced typesetting | Enabled |
| Word Wise | Enabled |
| Print length | 469 pages |
| Lending | Enabled |
| Screen Reader | Supported |

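One of the most important concepts in information theory is entropy. Entropy measures the average uncertainty in the output of a source of messages: the higher the entropy, the harder the next message is to predict, and the more information it carries on average. For example, a daily weather report for a place where rain and sunshine are equally likely has higher entropy than a report for a desert town where it almost never rains, because the desert report nearly always says the same thing.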

Another important concept in information theory is channel capacity. Channel capacity is the maximum rate at which information can be transmitted over a communication channel with an arbitrarily small probability of error. It depends on properties of the channel such as its bandwidth and noise level.


The Shannon Entropy Formula

The Shannon entropy formula measures the entropy of a discrete source X that emits symbols x with known probabilities. The formula is:

H(X) = -Σp(x) log₂p(x)

where:

- H(X) is the entropy of the source X

- p(x) is the probability that the source emits symbol x, and the sum runs over every symbol with p(x) > 0

- log₂ is the base-2 logarithm, which is why H(X) is measured in bits

The formula applies to any discrete source whose symbol probabilities are known. For example, a source that emits two symbols, A and B, with equal probability has an entropy of exactly 1 bit: H = -(½ log₂ ½ + ½ log₂ ½) = -(½ · (-1) + ½ · (-1)) = 1.
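The formula translates directly into a few lines of Python. This is a minimal sketch: the function name `shannon_entropy` is our own, and the 90%/10% weather distribution is a hypothetical example, not real data.

```python
import math


def shannon_entropy(probs):
    """Return H(X) = -sum(p(x) * log2 p(x)) in bits for a discrete distribution.

    Zero-probability symbols are skipped, using the convention 0 * log2(0) = 0.
    """
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must be non-negative and sum to 1")
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Two equally likely symbols, A and B: exactly 1 bit.
print(shannon_entropy([0.5, 0.5]))  # 1.0

# Hypothetical forecast source: "sun" 90% of the time, "rain" 10%.
# It is more predictable, so its entropy is below 1 bit.
print(round(shannon_entropy([0.9, 0.1]), 3))  # 0.469
```

A uniform source over four symbols gives 2 bits, and any source that always emits the same symbol gives 0 bits, matching the intuition that a certain outcome carries no information.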

The Channel Capacity Theorem

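The channel capacity theorem, usually called the Shannon–Hartley theorem, applies to a bandwidth-limited channel with additive white Gaussian noise. It states that the maximum rate at which information can be sent over such a channel with an arbitrarily small probability of error is: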

C = W log₂(1 + S/N)

where:

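- C is the channel capacity, in bits per second

- W is the bandwidth of the channel, in hertz

- S is the average received signal power

- N is the average noise power, in the same units as S, so that S/N is a plain (linear) ratio rather than a value in decibels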

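The theorem gives an upper bound on the error-free data rate of any system that uses such a channel. No practical design can exceed it, so engineers use it as a benchmark for how close a communication system comes to the best possible performance.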
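As a rough illustration, the channel capacity formula (the Shannon–Hartley theorem) can be evaluated in Python. This sketch assumes the signal-to-noise ratio is quoted in decibels, as it often is in practice, and converts it to the linear ratio S/N that the formula expects; the function name and the 3 kHz, 30 dB figures are hypothetical.

```python
import math


def shannon_hartley_capacity(bandwidth_hz, snr_db):
    """Return C = W * log2(1 + S/N) in bits per second.

    snr_db is the signal-to-noise ratio in decibels (an assumption of this
    sketch); it is converted to the linear power ratio S/N first.
    """
    snr_linear = 10 ** (snr_db / 10)
    return bandwidth_hz * math.log2(1 + snr_linear)


# Hypothetical channel: 3 kHz of bandwidth at a 30 dB SNR (S/N = 1000).
capacity = shannon_hartley_capacity(3_000, 30)
print(f"{capacity:.0f} bit/s")  # 29902 bit/s
```

Doubling W doubles C, whereas at high SNR doubling the signal power adds only about one bit per second per hertz, because S/N sits inside the logarithm.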

Applications of Information Theory

Information theory has a wide range of applications in areas such as:

- Communication systems: Information theory is used to design communication systems that can efficiently transmit information over noisy channels.

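- Data compression: Information theory is used to develop lossless compression techniques that reduce the size of files without losing any information; for a source whose symbols are independent, its entropy is the lower limit on the average number of bits per symbol that such techniques can achieve.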

- Cryptography: Information theory is used to develop cryptographic techniques that can protect information from unauthorized access.

- Biology: Information theory is used to study the transmission of information in biological systems, such as DNA and RNA.

- Economics: Information theory is used to study the flow of information in economic systems.
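The link between entropy and compression can be checked with Huffman coding, one classic lossless method (chosen here for illustration; the article does not name a specific technique). For a source with known symbol probabilities, the average Huffman codeword length L satisfies H(X) ≤ L < H(X) + 1. The sketch below tracks only codeword lengths, not the bit strings themselves, and the four-symbol source is hypothetical.

```python
import heapq
import math
from itertools import count


def huffman_code_lengths(probs):
    """Return a dict mapping each symbol to its Huffman codeword length.

    probs: dict of symbol -> probability.
    """
    if len(probs) == 1:
        # A lone symbol still needs one bit per occurrence to be written out.
        return {sym: 1 for sym in probs}
    tie = count()  # unique tie-breaker so heapq never compares the dicts
    heap = [(p, next(tie), {sym: 0}) for sym, p in probs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Merge the two least probable subtrees; every symbol inside them
        # moves one level deeper, so its codeword grows by one bit.
        p1, _, left = heapq.heappop(heap)
        p2, _, right = heapq.heappop(heap)
        merged = {sym: depth + 1 for sym, depth in {**left, **right}.items()}
        heapq.heappush(heap, (p1 + p2, next(tie), merged))
    return heap[0][2]


def entropy_bits(probs):
    """Shannon entropy in bits of a dict of symbol -> probability."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)


# Hypothetical four-symbol source.
source = {"A": 0.5, "B": 0.25, "C": 0.125, "D": 0.125}
lengths = huffman_code_lengths(source)
avg_len = sum(source[s] * lengths[s] for s in source)
print(lengths)  # {'A': 1, 'B': 2, 'C': 3, 'D': 3}
print(avg_len, entropy_bits(source))  # 1.75 1.75
```

Because every probability in this source is a power of ½, the Huffman code meets the entropy bound exactly; for other distributions the average length lands above H(X) but below H(X) + 1.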

Information theory is a fundamental tool for understanding communication systems, data compression, and cryptography. It is a rapidly growing field with a wide range of applications. As the amount of information in the world continues to grow, information theory will become increasingly important.

Image credits:

- Information theory by Wikipedia user Benjah-bmm27 is licensed under the Creative Commons Attribution-Share Alike 3.0 Unported license.

- Shannon entropy example by Wikipedia user Benjah-bmm27 is licensed under the Creative Commons Attribution-Share Alike 3.0 Unported license.

- Channel capacity example by Wikipedia user Benjah-bmm27 is licensed under the Creative Commons Attribution-Share Alike 3.0 Unported license.
